From deep learning to deep understanding: Hands-on introduction to Deep Learning interpretability

Raúl Benítez. Universitat Politècnica de Catalunya

Jordi Ollé. conceptosclaros.com

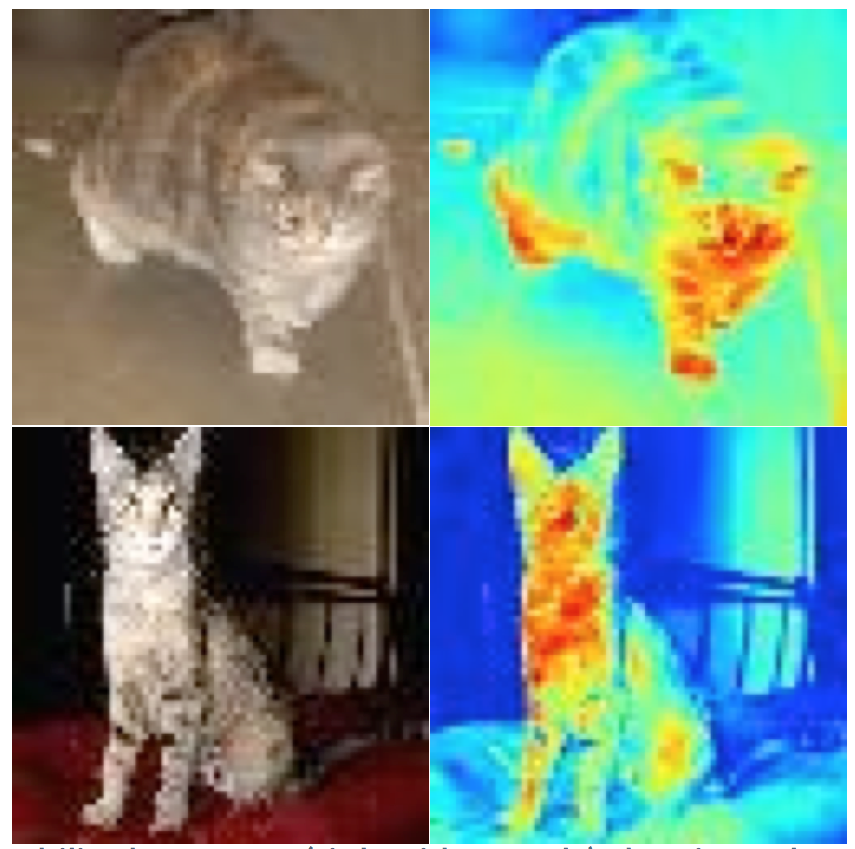

Abstract: Deep learning (DL) has become the leading approach for automatically recognizing complex patterns in digital images. However, although DL models typically achieve higher accuracy than traditional machine learning approaches based on hand-crafted features, their decisions are difficult for domain experts to interpret. The aim of this tutorial is to provide a brief introduction to deep learning interpretability methods that produce visual explanations of convolutional neural networks (CNNs). The tutorial will cover state-of-the-art post-hoc approaches such as saliency maps, occlusion sensitivity, Gradient-weighted Class Activation Mapping (Grad-CAM), and Local Interpretable Model-agnostic Explanations (LIME).
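To give a flavour of the post-hoc methods listed above, the following is a minimal sketch of occlusion sensitivity using only NumPy. It is not the tutorial's own code: the function `occlusion_sensitivity`, its parameters, and the stand-in scoring function `toy_score` (which replaces a real CNN class score) are assumptions made for illustration.

```python
import numpy as np

def occlusion_sensitivity(image, score_fn, patch=4, stride=4, fill=0.0):
    """Heatmap of the score drop observed when each patch is occluded.

    score_fn is any callable mapping an image to a scalar class score;
    in practice it would wrap a trained CNN (assumption for this sketch).
    """
    h, w = image.shape[:2]
    baseline = score_fn(image)
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heatmap = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            occluded = image.copy()
            y, x = i * stride, j * stride
            occluded[y:y + patch, x:x + patch] = fill  # mask one patch
            heatmap[i, j] = baseline - score_fn(occluded)
    return heatmap

def toy_score(img):
    # Hypothetical "model": only the top-left 8x8 region affects the score.
    return float(img[:8, :8].mean())

image = np.ones((16, 16))
heatmap = occlusion_sensitivity(image, toy_score)
print(heatmap)
```

Large values in the heatmap mark regions whose occlusion lowers the score the most, that is, the regions the model relies on. With the toy score above, only the patches inside the top-left 8x8 region are non-zero.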

Platform and code: The course will be implemented entirely in Python notebooks running on the Google Colaboratory cloud platform (https://colab.research.google.com/). All code will be provided in an open GitHub repository, https://github.com/

Course duration: 3 hours

Course structure:

- Introduction to Deep Learning models and interpretability methods (1h presentation)

- Hands-on tutorial (2h):

  - How a CNN works: feature maps

  - Basic visual explanations

  - Comparison and take-home messages
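As a rough preview of the "feature maps" topic in the hands-on part, the sketch below computes a single feature map by sliding one filter over a small image and applying a ReLU. It is an illustrative assumption, not the course material: `conv2d_valid` is a hypothetical helper, and the hand-written vertical-edge kernel stands in for a filter that a real CNN would learn.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding (cross-correlation,
    which is the operation CNN layers conventionally compute)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (a trained CNN would learn its filters).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

# Feature map = filter response followed by a ReLU non-linearity.
feature_map = np.maximum(conv2d_valid(image, kernel), 0.0)
print(feature_map)
```

The feature map is non-zero only where the filter window straddles the edge, which is the sense in which a feature map shows where a given pattern occurs in the input.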